JHU/APL Wins Award for Innovation (And I Had a Tiny Part in It!)

Monday September 30, 2019

This morning, I got into the office to see that JHU/APL had won an Impact Award from Innovation Leader magazine. That’s awesome, but what was even cooler was seeing my project listed as part of the reason why. From the article:

The first part of the HELP Challenge involved a “data call” for relevant staff skills. The second part involved a micro-grant mechanism to enable staffers to modify existing technologies or create dual-use technologies that might be applicable in disaster or rescue scenarios, similar to the cave situation in Thailand. The challenge resulted in 153 staff sharing relevant technical skills that might not be on their traditional résumés, and 50 ideas submitted. Eleven of the ideas were funded for a short-timeframe exploration of their potential.

Among those ideas: leveraging artificial intelligence to create more effective flu vaccines; low-cost air quality sensors; and using virtual reality to see what a physical space looked like before the disaster struck. “This program is now prepared to respond to a disaster and leverage APL’s skills and capabilities,” according to the award submission. Additionally, “some of the technologies are being spun in to customer-funded projects.”

My project was the virtual reality platform mentioned. After a disaster, buildings that once stood are gone and nothing more than debris remains. Tornadoes, floods, explosions, and other mass casualty events leave little time to reach victims and even less to get them help. However, Google has mapped much of the nation, providing street-level views of points across the United States. Further, local jurisdictions typically require architectural and engineering drawings as part of the permitting process.

We can use this collected data to create virtual 3D maps of the world as it was before the disaster struck. This information can be displayed in an augmented reality (AR) system, like the Microsoft HoloLens, creating a heads-up display of the way things ought to be. This view can assist first responders and rescuers, showing them where parts of buildings once stood, what form structures took, and other metadata collectible from public data sources. This metadata might include altitude, the locations of power lines and underground pipelines, or information as basic as listings from the phone book. This information may also help rescuers determine where victims are likely to be, speeding rescue while helping them avoid hazards such as gas and electrical mains.

For a proof of concept, we took advantage of the construction of a new building on our campus at JHU/APL. The new building, B201, is a milestone for the campus and has generated much interest. Accordingly, a three-dimensional model of B201 already existed, developed from the building’s architectural drawings. The model, imported into Autodesk Revit, had been used to create virtual reality (VR) walkthroughs and dynamic displays of how the building’s interior might be configured. You can see a video from “inside” the heads-up display here:

Augmented Reality / B201 Example


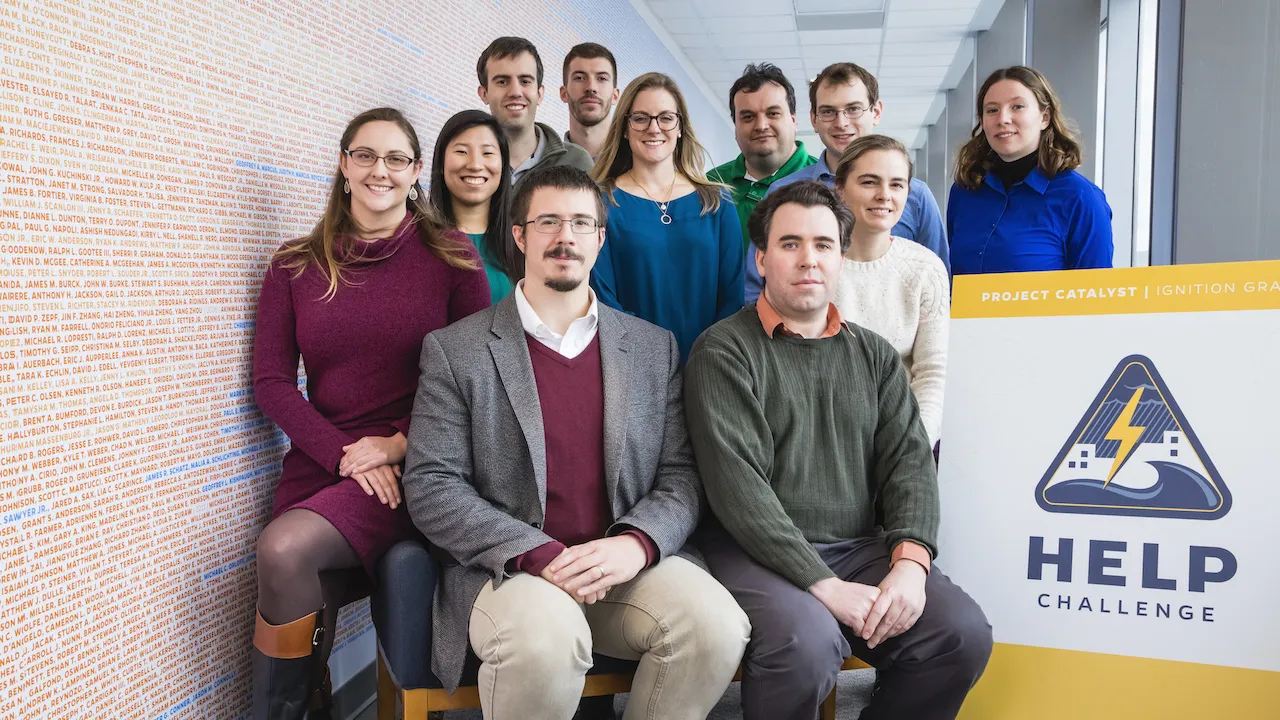

This is really awesome stuff, and we’ll have a forthcoming article in the Johns Hopkins APL Technical Digest. We also presented at the 2019 xR Symposium, hosted at APL back in July. I also need to say that I only had the idea; the development team really made this happen, and they were great:

I should get links for all of them! Finally, you can read more about the program and the award on APL’s website:

Johns Hopkins APL Receives 2019 Impact Award from Innovation Leader

The Johns Hopkins Applied Physics Laboratory (APL) in Laurel, Maryland, has received a 2019 Impact Award from Innovation Leader for an internal initiative called the Ignition Grants HELP Challenge.

Image courtesy of the Johns Hopkins Applied Physics Laboratory.