The Case for RISC-V

Monday January 30, 2023

Computer users have long suffered from a diversity of hardware. While many different types of computers have been available since the start of the industry, this has posed a problem for consumers and businesses buying computer equipment, as software may not necessarily run on a new system. This increases the cost of transitioning and leads to “vendor lock-in,” the phenomenon where customers are committed to a particular supplier.

Recently, an open systems architecture has emerged in the form of RISC-V. RISC-V is a modern RISC architecture available in both 32-bit and 64-bit varieties, meeting modern computer users’ needs, as well as the needs of the embedded market. RISC-V also has a strong pedigree, having been developed in the same milieu that developed the SPARC architecture. The architecture itself is controlled by the RISC-V International organization, with more than 3100 members across 70 countries. The architecture is available for free for anyone to implement, and its specification has been ratified by RISC-V International, along with numerous specialized computing extensions. Accordingly, RISC-V presents a unique opportunity for global standardization on a single, vendor-neutral hardware architecture, benefiting consumers and businesses.

Following this introduction, this paper presents a very brief history of computing architectures, demonstrating the long-standing problem of diversity. It then explores the value of open standards and shows how RISC-V, as an open standard, delivers that value. Finally, it offers a conclusion and some suggestions for how public policy can advance the objective of open standards in processor architecture.

Ever since the second computer was built, users have had to contend with different and incompatible architectures. Early computers, like the Harvard Mark I, the Zuse Z3, ENIAC, and many others, were custom-built, often to solve custom problems. These systems were often “programmed” by reconfiguring the physical infrastructure of the system. Under these early conditions, every system was just as much an experiment as it was a tool. Each of these early machines was also unique. Software was barely an idea at the time, so the concept of moving software between independent systems was not even considered.

It was only a decade later that computer companies were selling identical copies of systems that could run the same software, just as software was becoming an independent idea. Companies like IBM, RCA, and Royal McBee were selling computers that could share software with other installations of the same system, albeit not with each other’s systems. By 1970, Digital Equipment Corporation (DEC) was selling computers from its PDP line, including the wildly popular PDP-8 and PDP-11 systems. Despite the naming, the PDP-8 and PDP-11 systems were not compatible with each other, though the PDP-8 was binary compatible with the PDP-12. As computers moved to the desktop, DEC continued producing new systems using a variety of architectures and was soon joined by different architectures from Sun Microsystems, Silicon Graphics Inc. (SGI), Hewlett-Packard, and many other vendors.

As computers entered the consumer market, things were only marginally better. In the earliest days of home computing, two architectures dominated the market: Intel Corporation’s 8080 and MOS Technology, Inc.’s 6502. Derivatives and semi-compatible systems were available for each, especially Zilog, Inc.’s Z80, which was an extension of the 8080 platform. In 1981, IBM released the IBM Personal Computer, based on Intel’s 8086 processor architecture. The 8086 is the direct ancestor of the processors in modern computers from most vendors and is the basis for the modern x86-64 architecture, which maintains a substantial degree of backward compatibility. Apple, having built its earliest systems on the 6502 architecture, introduced the Macintosh based on Motorola, Inc.’s 68000 architecture in 1984, but switched ten years later to the PowerPC architecture, jointly developed by Apple, IBM, and Motorola. Apple would switch twice more: in 2006 to Intel’s x86 architecture, and again in 2020 to the ARM architecture, which itself has been around since the 1980s and has long dominated the embedded market.

While the history presented here has been simplified out of necessity, at least a dozen different processor architectures have been referred to, either directly or indirectly. Many others existed over this period. This is important because, while architecture can drive factors like processor speed, capabilities, and memory access patterns, different architectures generally also mean different instruction sets. That means software written for one architecture will not run on another, absent emulation or some sort of hardware specialization. This matters for consumers and businesses alike, as it means existing applications cannot be run and must be replaced when switching systems. With only two dominant architectures today, x86-64 and ARM, this risk is de minimis. However, the steady advancement of technology is likely to lead to new architectures, or incompatible extensions to the existing architectures, as time goes on.
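The incompatibility described above is visible in compiled programs themselves. As a minimal sketch, assuming the ELF executable format used on Linux and many other Unix-like systems, the following Python reads the `e_machine` field from a binary's header to see which architecture it targets. The function names `elf_architecture` and `can_run_natively` are illustrative and not part of any library, and the table lists only a handful of the values defined by the ELF specification.

```python
import struct

# A few e_machine values from the ELF specification (the full list is much longer).
ELF_MACHINES = {
    2: "SPARC",
    3: "x86 (32-bit)",
    4: "Motorola 68000",
    8: "MIPS",
    20: "PowerPC",
    40: "ARM (32-bit)",
    62: "x86-64",
    183: "ARM (64-bit)",
    243: "RISC-V",
}


def elf_architecture(header: bytes) -> str:
    """Return the architecture named in the first 20 bytes of an ELF file."""
    if len(header) < 20 or header[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    # Index 5 of the identification block gives the byte order (1 = little, 2 = big).
    byte_order = "<" if header[5] == 1 else ">"
    # e_type and e_machine are 16-bit fields that follow the 16-byte identification block.
    _e_type, e_machine = struct.unpack_from(byte_order + "HH", header, 16)
    return ELF_MACHINES.get(e_machine, f"unknown ({e_machine})")


def can_run_natively(header: bytes, host_architecture: str) -> bool:
    """True when the binary targets the host's architecture (ignores OS and ABI)."""
    return elf_architecture(header) == host_architecture
```

In practice, the header would come from something like `open(path, "rb").read(20)`. A binary whose header names RISC-V fails the `can_run_natively` check on an x86-64 host, which is the situation that forces emulation or replacement of the software.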

We start by defining what an open standard is. Tiemann addresses this question, offering a definition organized as a four-level standard. Of most interest here is the base-level standard:

The standard is documented and can be completely implemented, used, and distributed royalty free…Implementations of the standard may be extended, or offered in subset form. However, certification organizations may decline to certify subset implementations, and may require that extensions also satisfy the criteria of an Open Standard. Anything less than this is not an Open Standard, period.

Higher levels of the standard refer to software interoperability and may not necessarily apply to RISC-V. Accepting this as the base definition, we next need to understand the benefits of an open standard. We identify three key benefits.

The first key benefit is that an open standard is less likely to become obsolete. Many closed systems are replaced for reasons that are less technical and may be based on business decisions, leading to accusations of “planned obsolescence.” An open standard, with no single business entity owning it and standing to benefit from its obsolescence, should only be changed for technical reasons. Further, if the standard is developed openly by many independent contributors, future needs are likely to be identified earlier, reducing the need for later incompatible revisions.

The second key benefit is the lower barrier to entry for market participants. Defining interfaces, capabilities, and system mechanics is a substantial portion of the cost of research and development. By leveraging the work of open standards, that portion of the research and development cost is effectively eliminated. This gives market participants the ability to enter with lower capital requirements. It also lowers the cost of innovation as the open standard can be embedded as part of a larger new innovation without the expense of relying on proprietary technology.

The third key benefit is the assurance of interoperability, the raison d’être of this analysis. This is seen most prominently in the definition of TCP/IP, the standards for Internet communication. With open standards and open implementations of TCP/IP available, TCP/IP has displaced many alternative communication protocols over the decades. One of the benefits that occurs here is the self-reinforcing monopoly that grows from network effects. That is, as more participants use the standard, others come to rely on the standard, increasing the number of participants even further. While a monopoly is often viewed negatively, this can be seen as an advantage when the putative monopolist is an independent consortium rather than a single supplier.

With those benefits understood, we now want to understand how they apply to RISC-V. First, we must establish that RISC-V meets our requirements as an open standard. The definition of RISC-V is itself documented, including the instruction set architecture, binary interfaces, and assembly mnemonics. The documentation is available freely from the RISC-V International organization. The definition does not require any purchase and can be downloaded without registering. RISC-V also requires no royalty payments to implement the standard. The only restriction is that implementors must be members of the RISC-V International organization if they wish to use any of the RISC-V International trademarks in their marketing materials.

The first benefit to consider is the lack of obsolescence. The RISC-V standard is provided in two key forms today, a standard for 32-bit computing and a standard for 64-bit computing. While the market for alternatives to 32-bit and 64-bit computing exists, 32-bit and 64-bit computing make up essentially all computers in both business and the home, as well as many embedded applications. RISC-V meets the primary need here and provides all of the functionality necessary in both forms. Further, in a future push toward 96-bit or 128-bit computing, RISC-V can be extended, and initial work toward the development of a 128-bit RISC-V standard has begun. Regardless, even as consumers move toward more powerful computing, existing 32-bit and 64-bit computing systems will still be used, and RISC-V meets that requirement.

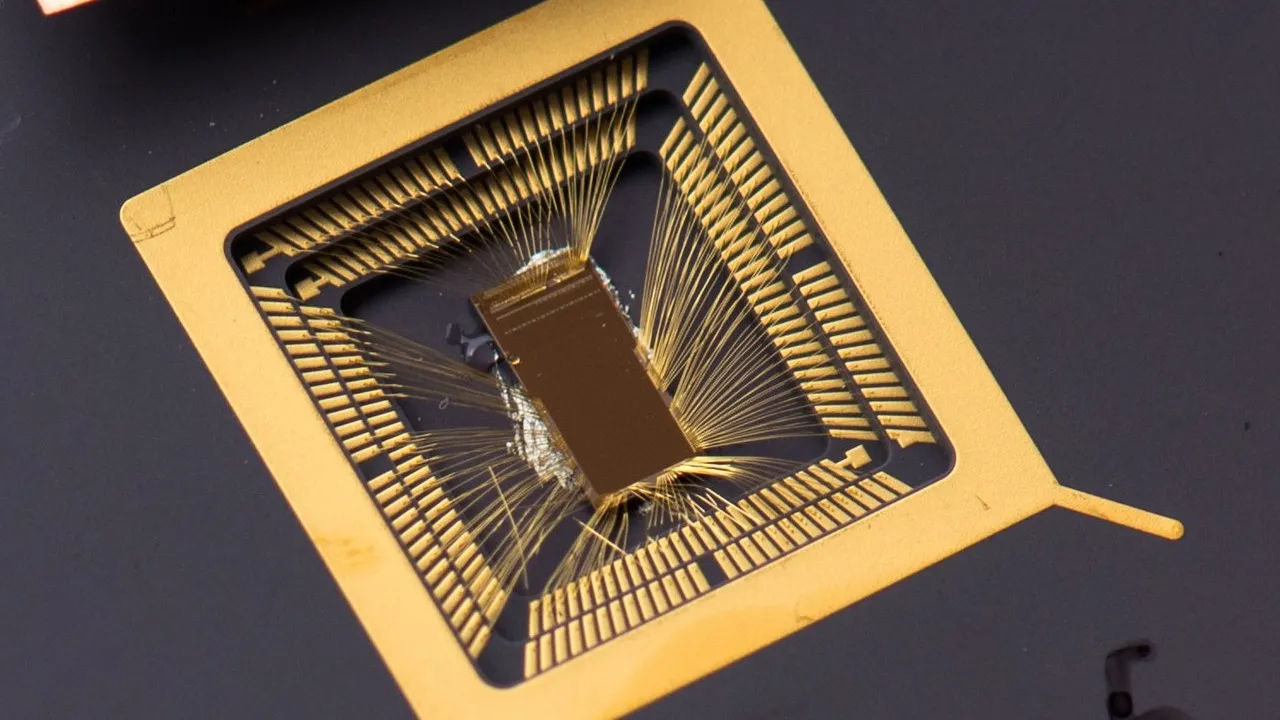

The second benefit is the lower barrier to entry for market participants. This is aptly demonstrated by SiFive, Inc., which has become a prominent supplier of RISC-V products in the embedded and System-on-Chip (SoC) market. Founded in 2015, SiFive has grown into a $2.5 billion corporation in just seven years. By building on the existing standard, SiFive has created intellectual property (IP) for implementations of RISC-V. In another instance, RISC-V is breathing new life into older businesses. MIPS Technologies, Inc. designed and licensed the MIPS architecture to numerous companies, including SGI and DEC. More recently, the company has begun designing new processor core IP around the RISC-V architecture. This would not have been possible for MIPS Technologies absent an open standard.

The final benefit we want to consider is interoperability. Because the architecture is uniform across vendors, consumers and businesses purchasing RISC-V equipment can be assured that software that works on one hardware component will work on another. In many ways, this echoes what consumers and businesses encountered from the late 1980s on with what have been called PC-compatible systems. Computers from companies like Dell, Compaq, and others could run the same software. However, different implementations of the 8086-based architecture occasionally led to interoperability difficulties. The RISC-V standard will limit this by ensuring that the core of every implementation meeting the standard is interoperable.
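One way to picture the interoperability guarantee is through RISC-V ISA strings such as `rv64imac` or `rv64gc`, which name a base width and the extensions an implementation provides. The sketch below is a deliberate simplification: it assumes lowercase strings with single-letter extensions and underscore-separated multi-letter extensions, ignores version numbers, and treats `g` as shorthand for the general-purpose bundle. The names `parse_isa` and `can_run` are illustrative only, and the rule that a program runs when its required extensions are a subset of the hardware's is a simplified model rather than the certification criteria of RISC-V International.

```python
import re
from typing import FrozenSet, Tuple

# "g" is the conventional shorthand for the general-purpose bundle of extensions.
G_BUNDLE = {"i", "m", "a", "f", "d", "zicsr", "zifencei"}

# Base width, single-letter extensions, then optional "_name" multi-letter extensions.
ISA_PATTERN = re.compile(r"rv(32|64|128)([a-z]+)((?:_[a-z][a-z0-9]*)*)")


def parse_isa(isa: str) -> Tuple[int, FrozenSet[str]]:
    """Split an ISA string into its base width and its set of extensions."""
    match = ISA_PATTERN.fullmatch(isa.lower())
    if match is None:
        raise ValueError(f"unrecognized ISA string: {isa!r}")
    width = int(match.group(1))
    extensions = set()
    for letter in match.group(2):
        if letter == "g":
            extensions |= G_BUNDLE  # expand the shorthand
        else:
            extensions.add(letter)
    extensions.update(name for name in match.group(3).split("_") if name)
    return width, frozenset(extensions)


def can_run(program_isa: str, hardware_isa: str) -> bool:
    """True when the hardware has the same base width and every required extension."""
    program_width, program_ext = parse_isa(program_isa)
    hardware_width, hardware_ext = parse_isa(hardware_isa)
    return program_width == hardware_width and program_ext <= hardware_ext
```

Under this model, a program built for `rv64imac` runs on any vendor's `rv64gc` hardware, while a program built for `rv64gc` is not guaranteed to run on an `rv64imac` part that omits floating point. The shared base is what carries across vendors, and the extensions are where implementations can differ.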

Together, we can see that adoption of RISC-V provides numerous benefits to users. It will also provide benefits to companies supplying RISC-V-based products. Due to the benefits and their relatively rapid accrual, there has already been a substantial move toward adoption for many use cases. However, governmental action can further adoption without favoring any specific technology. Public policy can be extended to support this development through governmental activity in the market.

Without requiring any specific action in the private market, governments can mandate the adoption of products that use open standards in government projects. In this regard, it is unnecessary to name RISC-V as the required standard. While RISC-V is the only substantial standard meeting the requirement today, developing other standards may equally well support the objective of a universal computing platform. This is essentially how TCP/IP became a universal communications protocol, through support from DARPA. We have also seen this pattern in software adoption as German governments have mandated specific requirements and promoted them against closed alternatives. In addition, like the TCP/IP example, governments can fund further development of projects that enable open hardware standards.

RISC-V provides a unique opportunity in the history of computing to achieve an essentially universal standard for products in business and the home. Consumers and businesses will benefit from this as their equipment becomes interchangeable at the hardware level. That said, there are still issues with interoperability as software, especially operating systems, will not necessarily be uniform. Regardless, this is a substantial step toward a universal computing platform.